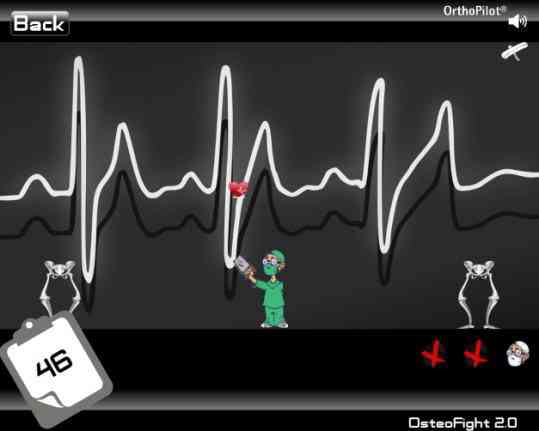

OSTEO FIGHT

In short

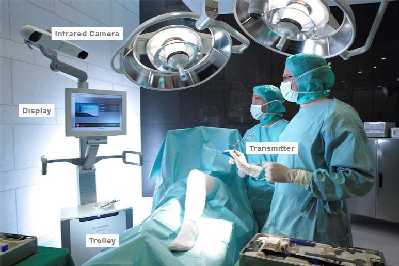

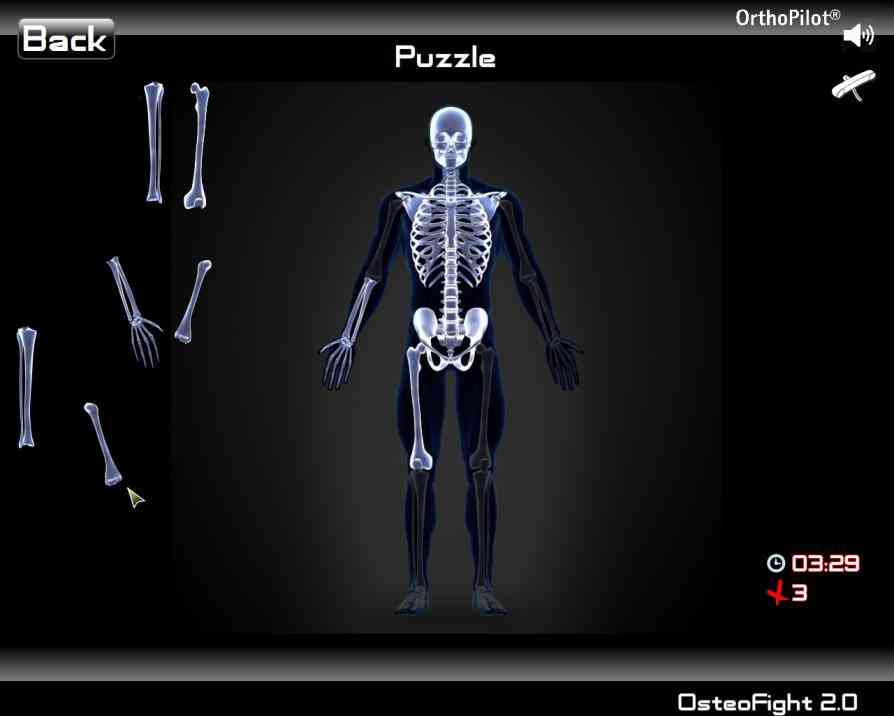

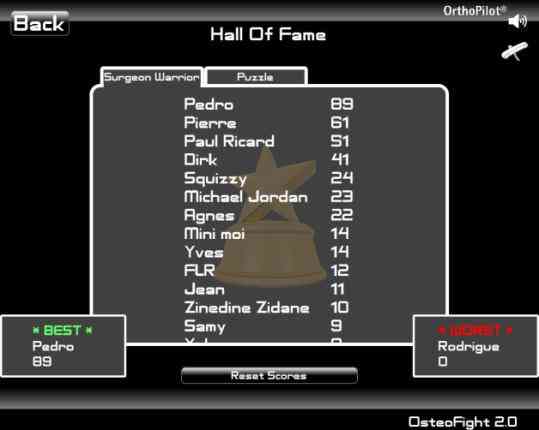

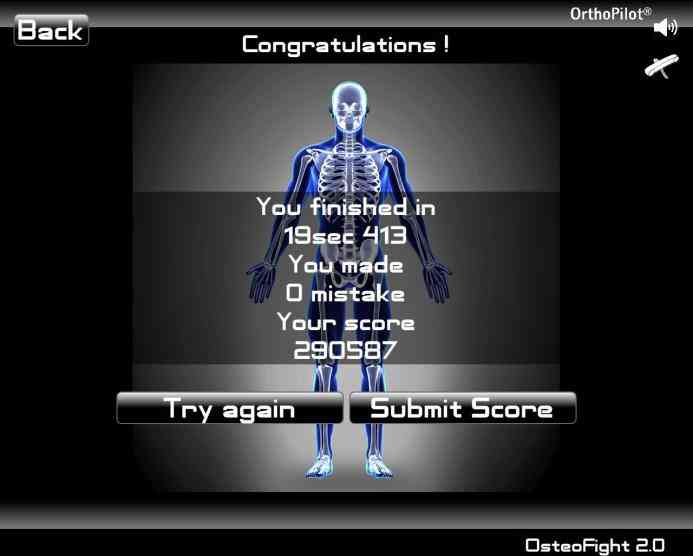

For my second year of school, I did an 11-week internship at B|Braun - Aesculap, where I was in charge of developing two serious games for training on the OrthoPilot platform.

The goal was to study the relevance and efficiency of gesture interactions for this platform.

The technologies I used for this project were C++ and Qt/QML.